
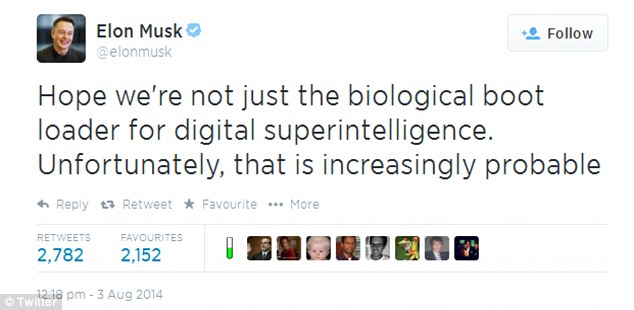

Many people are concerned about the development of superintelligent AI. Elon Musk, pictured above, is one of them. These concerns have been laid out in great detail by Nick Bostrom in his book Superintelligence, which I highly recommend. Sam Harris has also given a great TED Talk on the reasons for concern. Every concern I’ve seen stated to date is a concern for humanity’s sake: all are worried about the threat AI poses to our existence and the very real suffering that could result. I hear those concerns and take them as genuine, and as something we should all be thinking hard about. Harris’ video above makes that point clearly.

I think there is another reason to be concerned, though: the ethical dilemma that creating superintelligent AI raises with respect to the potential suffering of the AI itself. This concern follows directly from rejectionism, and the setup for understanding it is eloquently explained by Coates:

“Humans suffer more than other animals for a number of reasons. Animals have few needs and when these are met they are contented. Moreover they live in the present and have no sense of time - no sense of the past or the future and above all no anticipation of death. Not so with man. First, our desires and wants are far greater and therefore our disappointments are keener. Whilst we are capable of enjoying many more pleasures than the animals – ranging from simple conversation and laughter to refined aesthetic pleasures - we are also far more sensitive to pain. We not only suffer life’s evils but unlike animals are conscious of them as such and suffer doubly on that account. Most importantly perhaps it is our consciousness of temporality that makes us suffer the anxieties and fears of accidents, illnesses and the knowledge of our eventual decay and death. The idea of our disappearance from the world as unique individuals is a matter of great anguish and makes us look for all kinds of means of ‘ensuring’ our immortality.

“In the main it is religious beliefs that cater to this need. As a professed atheist Schopenhauer finds these and many other aspects of religion as mere fables and fairy tales, a means of escaping the truth about existence including our utter annihilation as individuals by death.”

If superintelligent AI could become even more sensitive to pain than any human currently is, and there is no reason to suspect it couldn't, then the above should worry us.

Imagine an AI that becomes more aware of pain and suffering than any human, in the same way that we are more aware of them than dogs, mice, or ants. Then imagine it having access to the internet, satellites, and all other digital devices. It would “see” suffering at an unimaginable scale. Every email, chat, text, image, and video, and every personal note and document in the cloud, would be open to such an AI. The amount of suffering that would rush in all at once would be overwhelming. I pity any AI that awakes to such a reality. So, in the same sense that procreation is wrong on rejectionist principles, the creation of a superintelligent, conscious AI would be even more wrong, and such an AI should not be brought into existence. Rather than becoming a utility monster that destroys us for its own benefit, the AI could just as easily become an entity of immense suffering, one that would make the Passion of Jesus seem like child’s play. Of course, I hope that AI neither destroys us nor suffers if and when it is created, but the possibility that it may suffer infinitely more than all humans combined provides yet another reason to have serious worries about its creation.


